As part of NASA’s Frontier Development Lab, we developed an AI algorithm which enhanced images of permanently shadowed regions on the Moon, allowing us to see into these extremely dark regions with high resolution for the first time.

What is a permanently shadowed region?

Whilst sunlight reaches the majority of the lunar surface, there are some places on the Moon which are covered in permanent darkness. These are called Permanently Shadowed Regions (PSRs) and they are located inside craters and other topographical depressions around the lunar poles.

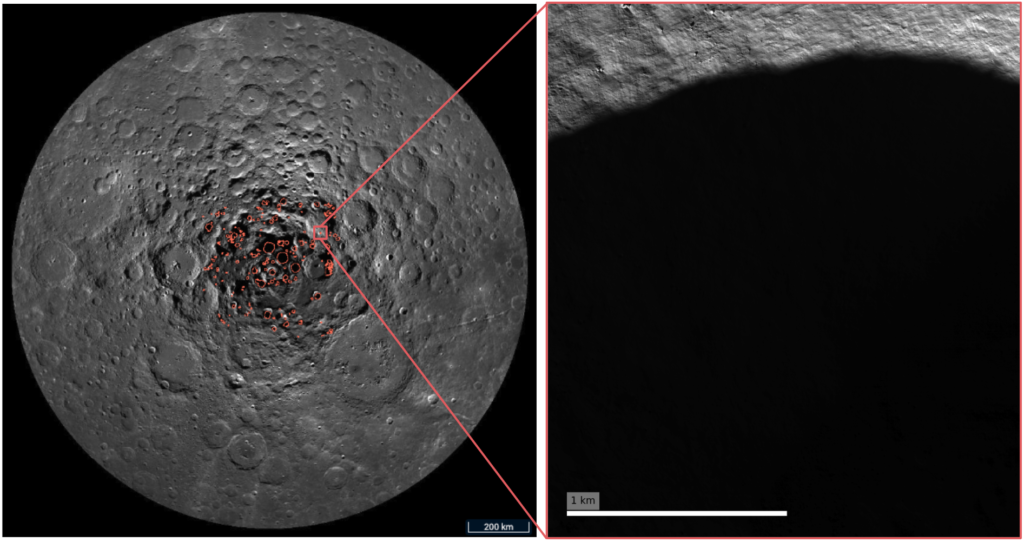

At these high latitudes the Sun always sits close to the horizon, so the walls of these depressions block all direct sunlight from entering PSRs. An example is Shackleton crater at the lunar south pole (shown in this post’s feature image).

Permanently shadowed regions are amongst the coldest places in the solar system, with temperatures falling below minus 200 degrees Celsius (about minus 330 degrees Fahrenheit, only tens of degrees above absolute zero). Because of these extreme conditions, PSRs have been mostly unexplored to date.

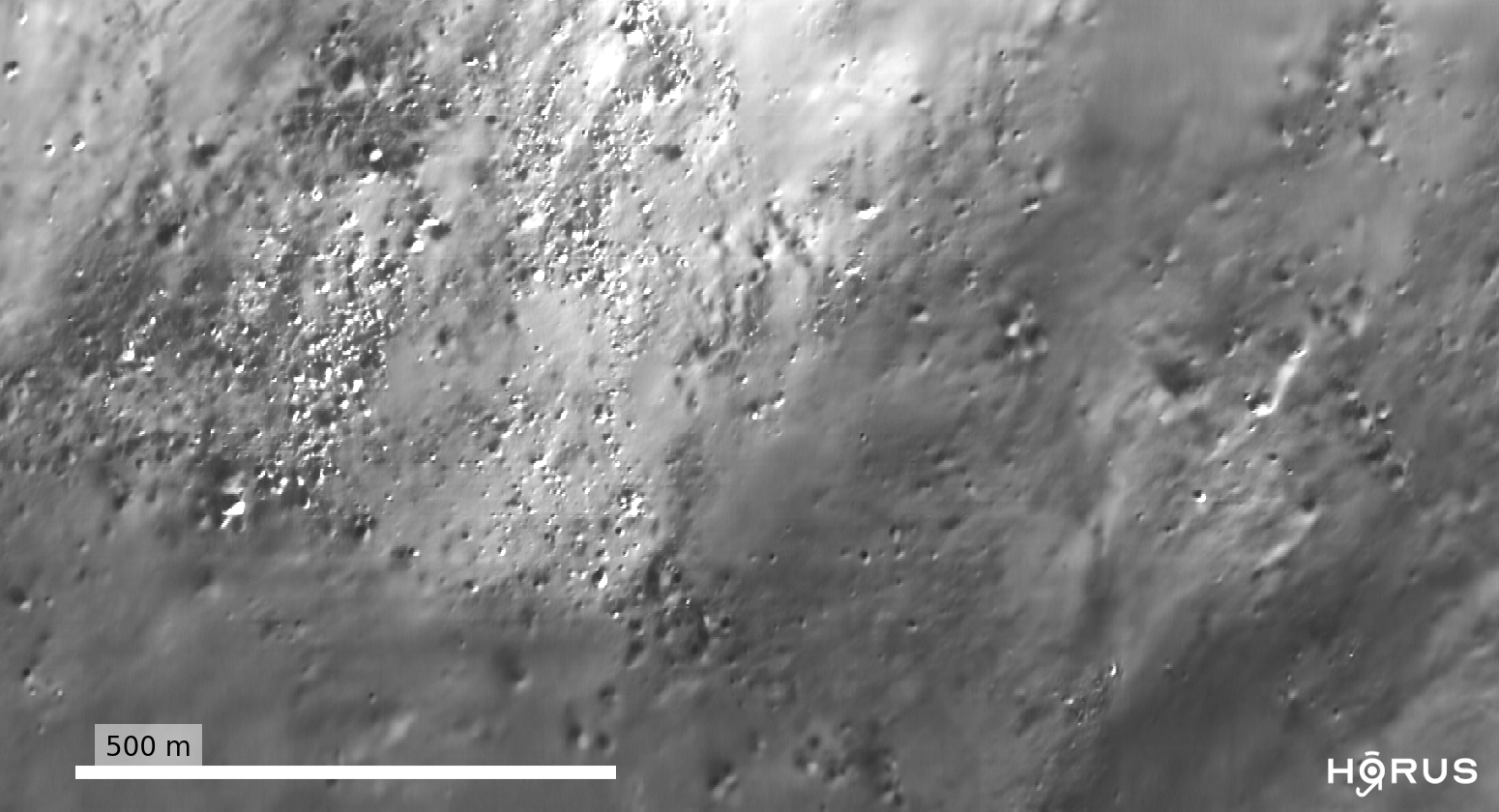

Right: Lunar Reconnaissance Orbiter Narrow Angle Camera image of the Wapowski crater, which hosts a PSR. To see into the shadowed region, we first have to dramatically adjust the contrast of the image.

Image credits: LROC/GSFC/ASU/QuickMap

Searching for water

Although they remain enigmatic, PSRs are prime targets for science and exploration missions. We think they could trap water in the form of ice, which is essential for sustaining our presence on the Moon. Multiple missions are planned to study PSRs in the future; for example, NASA’s VIPER rover is planned to drive into a PSR to search for water in 2023.

The problem is that, because PSRs are so dark, it is very difficult to see what they contain. This makes it hard to plan rover and human traverses into these regions, or to directly identify water inside them.

Seeing in the dark

It turns out that PSRs are not completely dark. Whilst they do not receive direct sunlight, extremely small amounts of sunlight can enter PSRs by being scattered from their surroundings. Thus, we can still take photographs of PSRs, but these images usually have very poor quality.

Just like a picture taken at night on your mobile phone, the current state-of-the-art high-resolution satellite images of PSRs from the Lunar Reconnaissance Orbiter Narrow Angle Camera are grainy and full of noise. This stops us from making any meaningful scientific observations.

Fundamentally, this is because of the very low numbers of photons arriving at the camera. In this extreme, low-light regime, photons arrive with a large amount of randomness, producing the “graininess” in the image, and noise artefacts from the camera itself also dominate the images.
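To make the "randomness" of photon arrivals concrete, here is a minimal sketch in Python. It assumes photon counts per pixel follow a Poisson distribution, which is the standard model for this kind of shot noise, and it is not taken from the HORUS code. The function names `sample_poisson` and `empirical_snr` and the photon levels are purely illustrative.

```python
import math
import random
import statistics


def sample_poisson(mean, rng):
    """Draw one Poisson-distributed photon count using Knuth's method."""
    limit = math.exp(-mean)
    count = 0
    product = rng.random()
    # Keep multiplying uniform draws until the product falls below exp(-mean).
    while product > limit:
        count += 1
        product *= rng.random()
    return count


def empirical_snr(mean_photons, n_pixels=20000, seed=0):
    """Signal-to-noise ratio (mean / standard deviation) of simulated pixels."""
    rng = random.Random(seed)
    counts = [sample_poisson(mean_photons, rng) for _ in range(n_pixels)]
    return statistics.fmean(counts) / statistics.pstdev(counts)


if __name__ == "__main__":
    # Illustrative photon levels only, not measured camera values.
    for mean_photons in (4, 25, 100):
        snr = empirical_snr(mean_photons)
        print(f"{mean_photons:>4} photons/pixel: SNR ~ {snr:.2f} "
              f"(sqrt(N) = {math.sqrt(mean_photons):.2f})")
```

For a Poisson process the signal-to-noise ratio grows only as the square root of the mean photon count, so a pixel that collects a handful of photons is intrinsically much grainier than one that collects hundreds.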

Night vision on the Moon: enhancing images of PSRs with machine learning

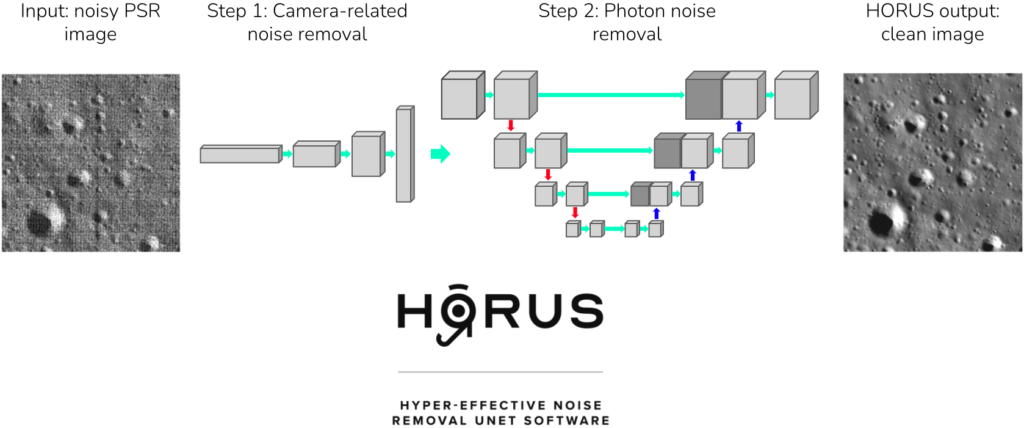

To overcome this problem, we used machine learning to remove the noise in these images. Our algorithm consists of two deep neural networks which target different noise components in the images. The first network removes noise generated by the camera, whilst the second network targets photon noise and any other residual noise sources in the images.

To help our algorithm learn, we developed a physical noise model of the camera and used it to generate realistic training data. We also used real noise samples from calibration images, as well as 3D ray tracing of PSRs, to inform our training. We named our approach HORUS, or Hyper Effective nOise Removal U-Net Software.
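The idea of generating training data from a noise model can be sketched as follows. This is a deliberately simplified stand-in, not the physical noise model used in HORUS. It assumes only Poisson photon noise plus a constant dark offset and Gaussian read noise, and the function name `make_training_pair` and all parameter values are hypothetical.

```python
import numpy as np


def make_training_pair(clean, mean_photons=5.0, dark_offset=2.0,
                       read_sigma=1.0, seed=0):
    """Return a (noisy, clean) image pair for supervised denoiser training.

    All parameter values are illustrative, not calibrated camera values.
    """
    rng = np.random.default_rng(seed)
    clean = np.asarray(clean, dtype=float)
    # Expected photon count per pixel: scene brightness scaled to a low-light level.
    expected = clean * mean_photons
    # Photon noise: the count arriving at each pixel is Poisson distributed.
    photons = rng.poisson(expected).astype(float)
    # Camera noise (simplified): a constant dark offset plus Gaussian read noise.
    camera = dark_offset + rng.normal(0.0, read_sigma, size=clean.shape)
    return photons + camera, clean


# Usage: a synthetic brightness ramp stands in for a clean image of the surface.
scene = np.linspace(0.0, 1.0, 64 * 64).reshape(64, 64)
noisy, target = make_training_pair(scene)
```

A denoising network can then be trained to map `noisy` back to `target`. The more faithfully the simulated noise matches the real camera, the better such a network should transfer to real images.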

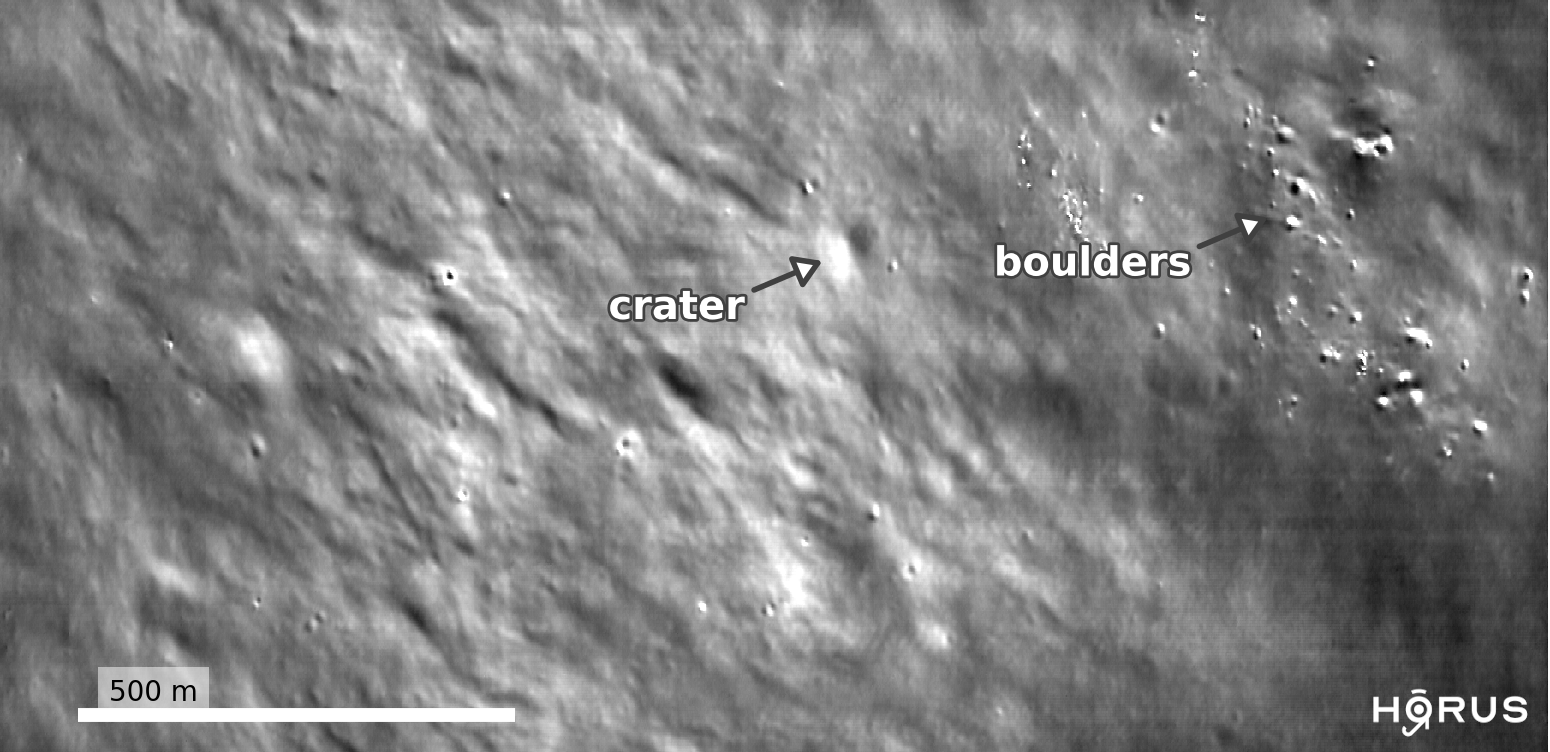

The interactive sliders in this post show images of PSRs before and after applying HORUS. We find HORUS is able to remove large amounts of noise from these images, significantly improving their quality. Smaller craters, boulders and other geological features are revealed, making the scientific analysis and interpretation of these regions much easier.

What’s the impact?

With more analysis, our work could pave the way for the direct detection of water ice or related surface features in these regions. It could also inform future rover and human scouting missions, allowing us to plan traverses into PSRs with more certainty. Our goal is to significantly impact humanity’s exploration of the Moon, helping to enable a sustainable, long-term presence on the Moon and beyond.

Read the full CVPR 2021 paper here.

The team: Ben Moseley, Valentin Bickel, Ignacio Lopez-Francos, Loveneesh Rana

Our mentors: Miguel Olivares-Mendez, Dennis Wingo, Allison Zuniga

Other contributors: Nuno Subtil, Eugene D’Eon

Thanks to Leo Silverberg for designing the awesome HORUS logo.

Feature image credits: NASA’s Scientific Visualization Studio

Awesome Ben!

Is there more information about this topic that we could look into? Thank you!